Our minds are subject to systematic automatisms, even if we are not aware of them. We often fall into traps of the mind without realizing it, and these traps can sometimes give us a distorted perception of reality and events. Because of these patterns, we may be unable to assess a story, an event or a phenomenon realistically and objectively, because we are inevitably influenced by our internal judgment. That judgment follows certain patterns and leads us to imprecise interpretations and erroneous conclusions. Nobody is exempt: we all have cognitive biases that arise from assumptions, previous experiences and social norms, and they invariably influence our reasoning.

ARE WE SURE WE ARE IN CONTROL?

These patterns are called cognitive biases. The English term “bias” derives from the Provençal “biais” and, before that, from the Greek “epikársios”, meaning oblique or inclined. They are patterns of thought that sometimes lead us to evaluate hastily the information in the world around us, with all its complexity. The term “cognitive bias” was coined in the early 1970s by Amos Tversky and Daniel Kahneman, two Israeli cognitive psychologists, who conducted a series of experiments showing that people often make systematic errors when reasoning and evaluating information. Yet it is precisely thanks to these patterns that we are able to make quick assessments, decisions and choices. They help us filter information, especially when we face numerous inputs, but they can also lead to errors in thinking.

A cognitive bias is therefore a systematic pattern of thought, caused by the brain's tendency to simplify information processing by drawing on its own experiences and preferences. Biases work like shortcuts that save us time. Unfortunately, these same shortcuts introduce distortions into our thinking and communication. This is obviously not our fault: several factors can contribute to these biases, including emotions, stress and social judgment, as well as factors related to memory and concentration, since our attention is selective. In addition, our way of thinking is influenced by past events and experiences.

EXAMPLES OF BIAS

Many biases have been studied and recognized. Let's look at some interesting ones that we can come across every day. The halo effect, for example, is an unconscious tendency to evaluate and judge someone or something on the basis of aspects unrelated to what we are actually evaluating. When we have a positive impression of a person, we are more inclined to see that person's other traits in a positive light as well. It is a cognitive error that leads us to focus on a single characteristic of a person and to form a positive overall judgment of all their other characteristics, which we do not actually know yet.

We are also subject to the bandwagon effect (in Italian, “effetto gregge”, or herd effect), the tendency to take an action after observing many other people doing it. This cognitive bias describes our tendency to develop a belief or to do something not so much because it is actually true or right, but rather because of the number of other people who share that belief or perform that action. Similarly, we can be “influenced” by the affect heuristic. The term heuristic refers to a reasoning strategy, and this one in particular explains how our perception of reality is significantly influenced by what we want at a given moment; it describes how we often rely on our emotions, rather than concrete information, when making a decision. For example, if we have had a bad day, then under the right conditions we might go shopping simply because it made us feel good in the past and could improve our current state of mind, even if only briefly. In addition, our emotional state leads us to attribute qualities or defects to the different options: when we are in a good mood we tend to be more optimistic, but when our mood is negative we focus more on the risks and the possible lack of benefits associated with a decision.

The Dunning–Kruger effect, instead, is the tendency of people with little expertise in a field to consider themselves more competent than they are, and also the tendency of capable people to underestimate their own skills. It occurs when people fail to recognize their own incompetence and so believe they are more capable than they actually are. Better-informed people, by contrast, are able to recognize their own shortcomings.

Perhaps the most dangerous is confirmation bias, which leads us to look for evidence that confirms what we already think or suspect, to consider only facts and ideas that support our opinions, and to reject or ignore any evidence that appears to support another point of view. We are also more likely to forget information that contradicts our opinions. Furthermore, this bias favors the polarization of opinions: we tend to hold a narrow set of ideas that often become extreme and conclude that "it's either black or white", whereas reality is often a scale of grays. Being aware of this would allow us to evaluate reality objectively, without extremes.

Also emblematic are the bias blind spot and the negativity bias. The first occurs when people deny that they are subject to cognitive biases, although, as we have seen, we are all subject to these thinking errors without being aware of them. The negativity bias, instead, leads us to pay more attention to negative information than to positive information, possibly because negative information is more relevant to our survival.

INFODEMIC

In an age of misinformation it is even easier to fall into these traps of the mind: when we seek confirmation of our ideas, when we read a few lines on a topic and then think we know everything there is to know, when we decide that others must necessarily share our opinions and we refuse to consider changing them. Not knowing something is not a sign of weakness, but it becomes one when we show no sincere interest in filling the gaps in our knowledge. We saw a bad example of this during the pandemic caused by the SARS-CoV-2 virus, when, alongside the uncontrolled circulation of the virus, the number of so-called conspiracy theorists also increased dramatically: people who tend to take at face value any news that seems to prove their theories, without asking whether it is even partially correct. In addition to the fight against the virus, this now represents an important public health challenge: the management of what the WHO has defined as an “infodemic”, an overabundance of information, some accurate and some not, that emerged during the pandemic. This raises a major question: how should we deal with the massive spread of information? One answer could be commitment: teaching people to recognize misinformation through educational programs, awareness campaigns and tools for evaluating information. Governments can also take measures to regulate the spread of false information; these could include promoting more transparent information practices and creating laws that punish the spread of false information.

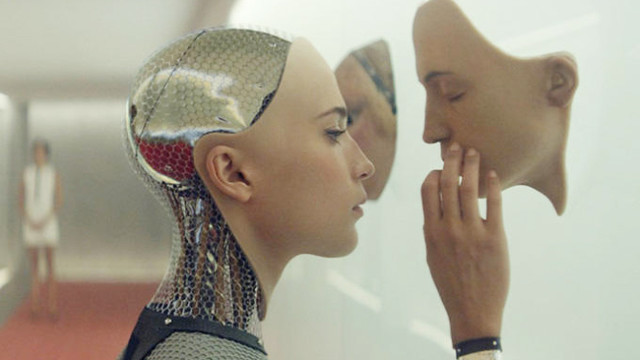

In addition, technological evolution and the constant generation of data highlight the inability of human beings to process and analyze such an enormous amount of data. This leads us to an inevitable interaction with artificial intelligence (AI) technologies and confronts us with the need to find and maintain a balance between humans and digital technologies. Technology could also be used to combat the spread of misinformation. For example, search engines can be used to filter out false information, and social media can be used to raise awareness of misinformation.

WHAT ROLE DO EMOTIONS PLAY?

As we have seen, emotions play a very important role, which helps us understand that we may not always be 100% rational, although not all cognitive biases are affected by emotions. Our brain uses biases to evaluate reality quickly, but they also structure our thinking. Many of our thoughts are influenced by our opinions, which in turn were formed over time, and their birth and evolution were influenced precisely by our emotions. Sometimes even our memories can be distorted, depending on who we are today and on the emotions we feel today. We can see, then, that all our activities are driven by emotions more than we think, even when we are able to make a rational evaluation. We cannot delude ourselves that we are always in control of every decision; it would be impossible to control the effect of these biases. Emotions have a huge impact on our choices even when we have the tools to resist instinct and emotion, and we must become aware that all reasoning can be subject to errors. This could give us a decidedly negative idea of the mind, not least because it shows how we can be influenced, often unconsciously, when interests that are important to us are at stake.

Several interesting books deal with the topic of bias, including Thinking, Fast and Slow by Daniel Kahneman, Decisioni intuitive (Gut Feelings) by Gerd Gigerenzer and Nati per credere (Born to Believe) by Telmo Pievani.

HOW TO “BYPASS” BIASES

Maintaining a critical attitude is not easy. It is difficult and tiring, because it requires researching and weighing different pieces of evidence, but curiosity and knowledge can certainly be the keys to "bypassing" these biases. This is why we must know our limits and be aware of our vulnerability. It is important to know that these biases exist, so that we always ask ourselves questions when we read something, rather than taking it on trust or believing it just because it confirms what we already know. This is what being critical means. We live in an ever-changing world, where knowledge and opinions change rapidly. For this reason, it is important to be open and willing to learn new things, and we can adopt some practical strategies to help reduce the negative impact of cognitive biases:

- Don't be too sure of your beliefs. It's natural to have beliefs, but it is important to be aware that they could be wrong. Try to listen to other people's opinions, even if they differ from yours; you may find that they make sense.

- Don't accept everything you read as true. Today it is easier than ever to access information, but not all information is accurate or unbiased. Always do your research and question what you read.

- Consider more than one point of view. Every problem can be seen from different angles. Try to understand the different perspectives at play, even if you don't share them, and never limit your understanding of a topic to a single point of view.

- Remember that headlines can be misleading. They are often written to capture attention and do not always reflect the content of the article. Always read the full article to understand what it is about.

- Reflect on your beliefs and prejudices. We all have prejudices that can influence the way we see the world. Take time to reflect on your beliefs and how they might be affecting your thinking.

- Seek information from reliable sources. Don't base your knowledge on a single source of information. Consulting different sources gives you a more complete view of the situation and reduces the risk of being deceived.

- If you are presented with a new idea, don't reject it out of hand. Consider it carefully and try to understand why it might be valid. Being open to new information and perspectives is a challenge that allows us to grow as people; it helps us understand the world around us and make more informed decisions.

Robert Galasso

Sources:

Landucci, F., Lamperti, M. A pandemic of cognitive bias. Intensive Care Med 47, 636–637 (2021). https://doi.org/10.1007/s00134-020-06293-y

Kahneman, D. Pensieri lenti e veloci (Thinking, Fast and Slow). Mondadori, 30 April 2012.

Image sources: https://slidemodel.com/ and https://it.freepik.com/
